One of our clients in bioscience was recently seeking a way to use API’s without creating custom Fivetran connectors. The business often needed to complete simple API calls—often hitting only one endpoint. Because of this, a simplified process would save its data engineering teams valuable time. As a Snowflake customer, they were also curious about exploring Snowflake External Functions as a way to pull data from an API directly into the warehouse.

Ideally, the right solution would enable them to:

- Eliminate the need to build custom Fivetran connectors for simple one-off API needs

- Use one lambda function for many API calls

- House as much of the code within Snowflake as possible

The Data Clymer team researched an open source tool called StarSnow to see if it could help our client achieve their goals. In this article, we’ll share what we learned and our recommendations for this client. We hope it’s helpful for other data engineers that want to save time and reduce the number of data tools they use with Snowflake Data Cloud.

What Is StarSnow?

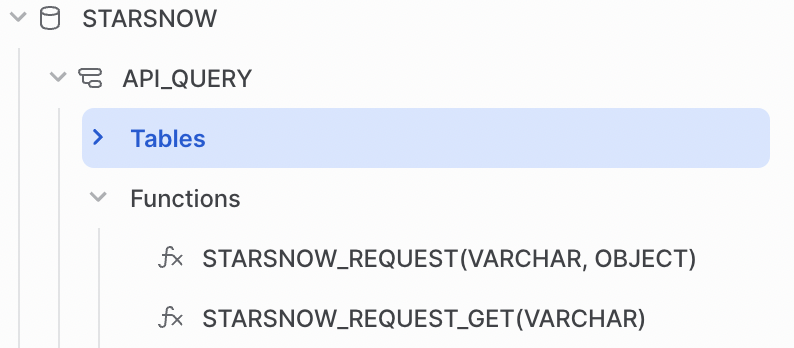

StarSnow is an open source tool which allows you to invoke Rest APIs directly from the Snowflake SQL editor by extending Snowflake’s external function feature. The package includes two functions which allow you to easily interact with web APIs by passing simple SQL statements.

Once installed, the StarSnow functions will be available directly from the Snowflake UI. This enables seamless querying and efficient storage right next to the rest of your data assets.

How StarSnow Works

When deployed with Serverless using the directions below, StarSnow automatically installs everything you need, so that you never need to leave your SQL editor. Additionally, you can use simple SQL queries to store results of the API calls in Snowflake tables.

Note: We recommend using the SnowSight UI with StarSnow, as it will allow you to view any saved functions once deployed.

StarSnow comes with two functions, which allow you to execute either a simple GET function or a customizable set of parameters passed as an object:

STARSNOW_REQUEST_GET(url *VARCHAR*)- Result data type: varchar

- This function takes one URL string argument and returns the content of that web address. It supports only the GET request method and no custom headers.

STARSNOW_REQUEST(url *VARCHAR*, params *OBJECT*)- Result data type: variant

- This function takes a URL and a parameters object that is passed as an Axios configuration for the request and can include other request methods and configurations.

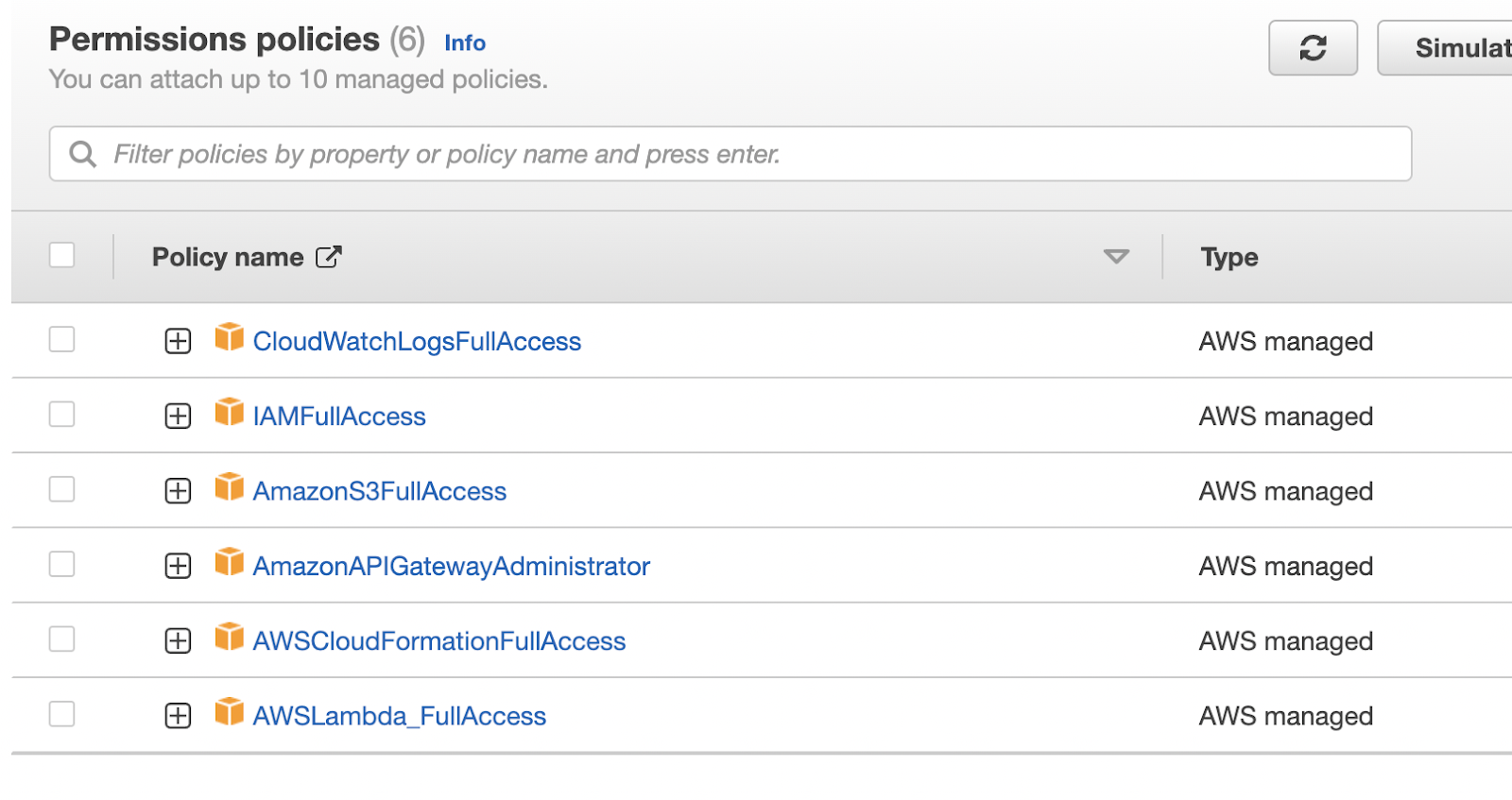

In addition to Snowflake credentials, StarSnow requires you to pass through AWS credentials. This allows the tool to create any necessary lambda functions and S3 buckets to complete the API calls.

The results of either function can be queried using simple SELECT statements, and even combined with data from other Snowflake tables to produce your desired reporting.

How to Deploy StarSnow

Building off of the installation instructions provided in the creator’s Medium article on StarSnow, we tested StarSnow ourselves and found success by following these steps. We recommend installing serverless using NPM. If you don’t already have Node.js on your machine, install it first. You should also already have an AWS and Snowflake account.

- Create new folder on your machine called

starsnow - Install nvm and NPM

- For Mac: run the following command in the terminal:

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.0/install.sh | bash - For Windows:

- Install the latest nvm for Windows release

- In the terminal, run the nvm use command followed by either the specific version you want to install or use latest, lts, or newest to get the newest version.

- For Mac: run the following command in the terminal:

- Install Serverless

npm install -g serverless

- Download and compile StarSnow sources

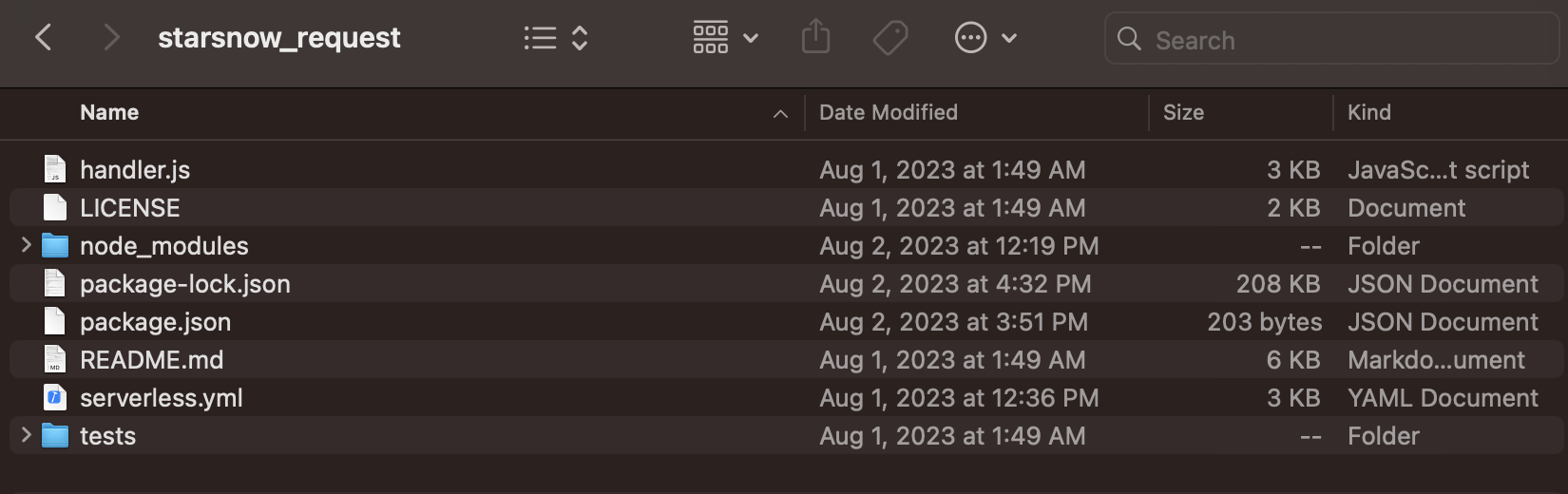

git clone https://github.com/starschema/starsnow_requestnpm install

- Set up the following environment variables (see more details here):

SNOWFLAKE_ACCOUNTSNOWFLAKE_USERNAMESNOWFLAKE_DATABASESNOWFLAKE_PASSWORDSNOWFLAKE_ROLE(make sure that the role hasCREATE INTEGRATIONprivilege)SNOWFLAKE_SCHEMAAWS_ACCESS_KEY_IDAWS_SECRET_ACCESS_KEY

We used the following AWS user group permissions:

How to Run StarSnow

How to Run StarSnow

You should now see a starsnow_request folder saved to your computer.

cdto this directory and confirm theserverless.ymlfile contains the expected environment variable for Snowflake authentication.- Use an editor to open

serverless.ymland change the provider runtime configuration toruntime: nodejs18.x - Run the Serverless deploy command to install all of the required packages and create the needed resources including lambda function, S3 buckets in AWS, API gateways, and external functions in Snowflake

npm installserverless deploy

- Once deployed, you will be able to run and save StarSnow functions within the specified database and schema, and view them under the functions dropdown in the SnowSight UI.

Recommendations

StarSnow is a great way to make simple API calls with very little overhead. It checks 2 of the 3 boxes our client initially hoped to achieve:

- Eliminates the need to build custom Fivetran connectors for simple one-off API needs

- Houses as much of the code within Snowflake as possible

And what about their third desire: to use one lambda function for many API calls?

While StarSnow still creates one lambda function per API, this is done automatically without requiring any extra work by the developer. So it still results in the valuable time savings the data engineering team had hoped for.

After testing StarSnow on a few public API’s, we believe the tool has great potential. We recommended our bioscience client use it to address their needs, and we encourage other Snowflake customers with a relevant use case to check it out!

For simple projects, it could be a great alternative to help save your data engineering team some time.

Need Data Engineering Expertise?

At Data Clymer, we harness the power of Snowflake Data Cloud to revolutionize how businesses manage and utilize their data. Our expert data engineers leverage Snowflake’s cloud data platform and other modern data stack tools to orchestrate seamless integration and optimization of data pipelines.

Contact us for help with Snowflake or other data engineering and data strategy services.

How to Run StarSnow

How to Run StarSnow